This article explains what the robots.txt file is and what it is mainly used for in WordPress.

Why the name Robots.txt?

The file is named robots.txt after the word “robot”, which here means any type of “bot” that visits websites over the Internet. The best-known examples are search engine crawlers, which help index your website in major search engines such as Google. The file itself plays a key role in regulating that traffic by allowing or blocking bots' access to specific parts of your website.

What is the Robots.txt file used for in WordPress?

The robots.txt file is a simple text file that tells search engine crawlers and other bots which pages or sections of a website they may crawl. In the context of a WordPress website, this file can be used to keep crawlers away from automatically generated pages or other areas you would rather not have crawled. Keep in mind that following the file is voluntary for bots and that anyone can read it, so it should not be relied on to hide sensitive information.

By using a robots.txt file in WordPress, you can manage the crawl traffic generated by different bots, which can help reduce unnecessary load on your site and improve its SEO.
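To make the idea of per-bot rules more concrete, here is a minimal sketch that uses Python's standard `urllib.robotparser` module to show how a well-behaved crawler might interpret a small robots.txt file. The crawler name `BadBot` and the `/private/` path are made up for illustration, and real crawlers may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: "BadBot" is an invented crawler name and
# "/private/" is a placeholder path, not a WordPress default.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if the rules let this user agent crawl the path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    checks = [
        ("BadBot", "/blog/"),
        ("Googlebot", "/blog/"),
        ("Googlebot", "/private/report.html"),
    ]
    for agent, path in checks:
        print(agent, path, is_allowed(ROBOTS_TXT, agent, path))
```

In this sketch, the first group of rules applies only to the named bot, while the `*` group covers every other crawler.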

Can you generate a Robots.txt file with a WordPress plugin?

The easiest way to create or edit the robots.txt file is through a plugin such as Yoast SEO. First, log in to your WordPress website.

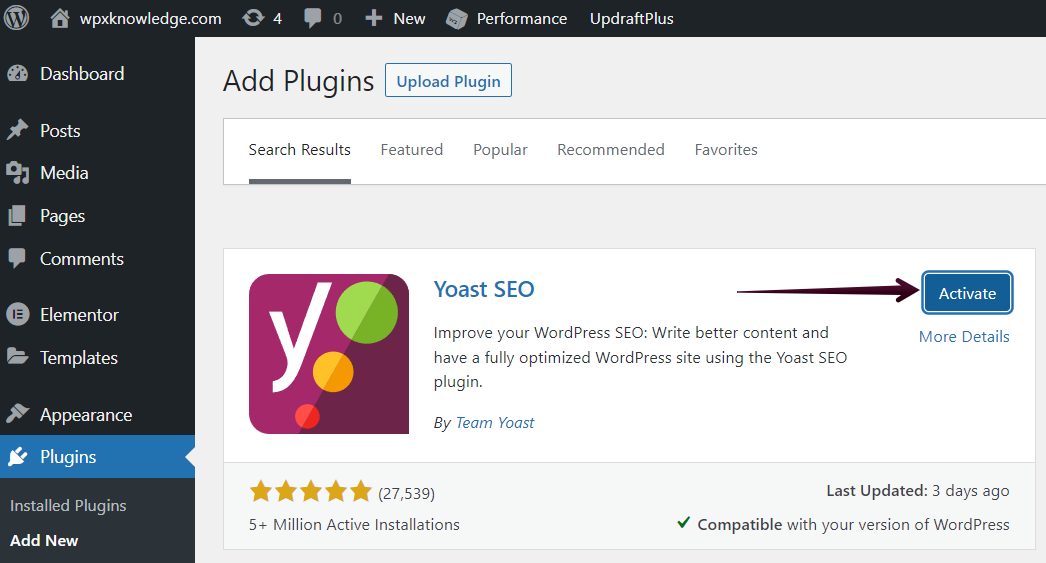

Once you are logged in to your WP Admin, go to Plugins (1) → Add New (2). Then enter Yoast SEO into the search bar (3), as shown in the screenshot below. Click the Install Now (4) button to begin installing the plugin.

After the plugin is installed, click Activate to enable it.

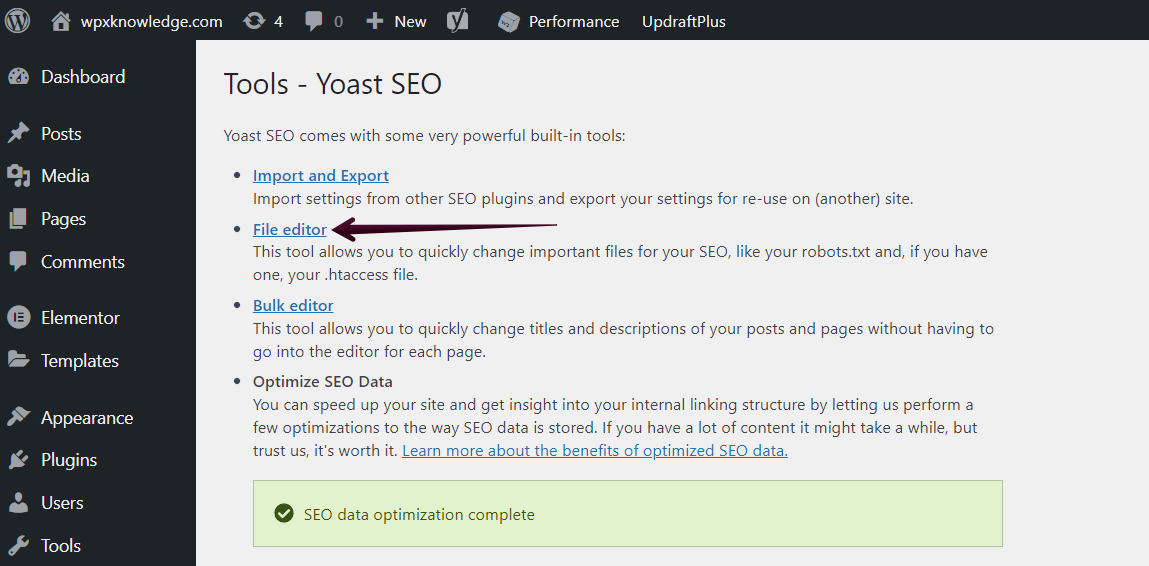

Once the plugin is active, open Yoast SEO (1) and choose Tools (2).

Then select the File Editor option, which lets you generate a robots.txt file.

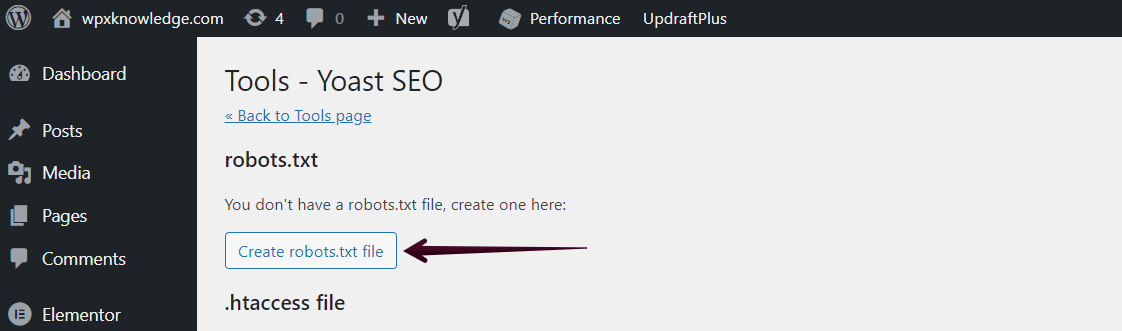

Once selected, you will see an option to Create robots.txt file.

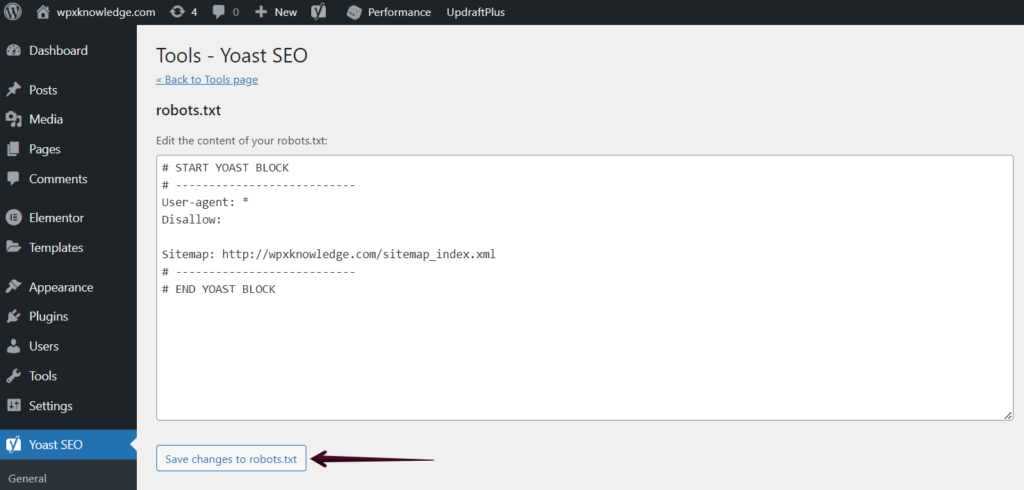

Once the file is generated, you can edit your robots.txt file directly in the plugin settings.

When you have finished making changes, click Save changes to robots.txt.
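As an illustration of the kind of content you might enter in the editor, the sketch below builds a small rule set that keeps crawlers out of `/wp-admin/` while leaving `admin-ajax.php` reachable, and then checks it with Python's standard `urllib.robotparser`. The helper names, the `example.com` sitemap URL, and the sample paths are assumptions for demonstration only; adjust the rules to your own site before saving anything.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(user_agent, rules, sitemap=None):
    """Render (directive, path) pairs as robots.txt text."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"{directive}: {path}" for directive, path in rules]
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


# Python's parser applies the first rule that matches, so the more
# specific Allow line is listed before the broader Disallow line.
RULES = [
    ("Allow", "/wp-admin/admin-ajax.php"),
    ("Disallow", "/wp-admin/"),
]

# Placeholder sitemap URL (assumption, not a real site).
CONTENT = build_robots_txt("*", RULES, sitemap="https://example.com/sitemap.xml")


def can_crawl(content, path, user_agent="Googlebot"):
    """Check whether the given rules let a user agent crawl a path."""
    parser = RobotFileParser()
    parser.parse(content.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    print(CONTENT)
    for path in ("/wp-admin/options.php", "/wp-admin/admin-ajax.php", "/blog/"):
        print(path, can_crawl(CONTENT, path))
```

Checking the rules this way before saving is optional, but it can catch a typo that would otherwise block more of the site than intended.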

Do you need Robots.txt?

Your website does not need a robots.txt file in order to run and function properly. The main reason to have one is that you run a traffic-heavy website and want to control the crawl traffic coming from bots by allowing or blocking specific ones.

Alternatively, if you have content that you do not want crawled, you can use robots.txt to block bots from that specific part of your website. Note that a blocked page can still appear in search results if other sites link to it.

Overall, you need to be careful when configuring robots.txt, especially if you do not have the help of a webmaster or an online generator. Mistakes such as blocking essential bots can hurt your SEO ranking and your traffic altogether.
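One way to guard against this kind of mistake is to test a draft before publishing it. The following is a rough sketch, again using Python's standard `urllib.robotparser`, that reports which bots from a short list would be locked out of the homepage. The list of "essential" bots and both sample rule sets are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# Assumed list of crawlers you would not want to block by accident.
ESSENTIAL_BOTS = ("Googlebot", "Bingbot")


def blocked_essential_bots(robots_txt, bots=ESSENTIAL_BOTS, path="/"):
    """Return the bots from the list that may NOT crawl the given path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in bots if not parser.can_fetch(bot, path)]


# A single stray slash blocks the entire site for every crawler.
RISKY = "User-agent: *\nDisallow: /\n"

# Blocking only the admin area leaves the homepage crawlable.
SAFER = "User-agent: *\nDisallow: /wp-admin/\n"

if __name__ == "__main__":
    print("risky draft blocks:", blocked_essential_bots(RISKY))
    print("safer draft blocks:", blocked_essential_bots(SAFER))
```

An empty result for the homepage does not prove the file is correct, but a non-empty one is a strong hint that a rule is broader than intended.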

If your website is simple, does not receive a lot of traffic, and your analytics reports look good, then you do not need to tamper with advanced crawl controls such as robots.txt.

If you have any other questions on the topic, don’t hesitate to contact the WPX Support Team via live chat (use the bottom right-hand widget). They will respond and help in 30 seconds or less.